One of the most common questions I see in the community around Generative AI is whether specific models can be used for professional/commercial work, and what claims of “safe for commercial use” actually mean. These questions, coupled with uncertainty over copyright law and other licensing issues, have led to a lot of confusion about the implications of using specific models for projects.

This post provides a non-legal, US-centric overview of Generative AI and claims around commercial safety, and discusses factors to consider when determining whether a model can safely be used for any particular project. It is not meant to be an exhaustive discussion of the topics, but can hopefully serve as a jumping-off point for going deeper in any specific area.

These views are my own, and not those of my employer. I’m also not a lawyer, and nothing in this post should be considered legal advice.

The main takeaway is that there is no legal definition of “safe for commercial use”, but the phrase is used to indicate confidence that a model will not create content that infringes on intellectual property rights, including copyrights and trademarks. Across all of the factors to consider when choosing a model, the question ultimately comes down to risk, and how much of it you are willing to take. It is important to look at the needs of your project when considering whether any specific generative AI model is appropriate for it.

What does Safe for Commercial Use mean?

So, what does it mean when a company asserts that its generative AI models are “safe for commercial use”? There is no legal definition of “safe for commercial use”, so in order to explore this, we will need to look at some examples of claims of commercial safety, see how they are defined, and look for areas consistent across all of them.

Here is Adobe’s description of what it means by “safe for commercial use”:

We deploy safeguards at each step (prior to training, during generation, at prompt, and during output) to ensure Adobe Firefly models do not create content that infringes copyright or intellectual property rights and that it is safe to use for commercial and educational work.

In addition, Adobe provides intellectual property indemnification for enterprise customers for content generated with Adobe Firefly. (Adobe Firefly Approach, from Adobe)

There are two things here.

First, Adobe discusses safeguards it takes to ensure its “models do not create content that infringes copyright or intellectual property rights”. Its statement for commercial safety appears to be based on its confidence that its model cannot create infringing content, even accidentally.

Second, Adobe feels confident enough in the output that it is willing to guarantee it by providing indemnification (at least for enterprises).

Looking at claims of commercial safety from Bria, you can see the same underlying reasoning: the model cannot be used to create infringing content. Bria also offers indemnification.

Finally, Getty Images (which is “powered by Bria”) makes a similar claim:

Commercially safe: Create confidently, knowing your AI‑generated images will not include recognizable characters, logos, and other IPs in the images it produces.

Getty also provides some indemnification.

Adobe, Bria and Getty all mention that their models are only trained on licensed content. However, while they mention how their models were trained, their indemnification (where they offer it) revolves around the output of the model.

From Bria:

Bria provides full indemnity against copyright infringement for all outputs generated by its models, for enterprise customers only.

And from Adobe:

The Adobe indemnity will cover claims that allege that the Firefly output directly infringes or violates any third party’s patent, copyright, trademark, publicity rights or privacy rights.

The claims of commercial safety revolve around the output of the models and the confidence that the models cannot generate infringing content.

But again, this isn’t a legal definition, so it is important that you look into the specifics for the model you are considering using.

As an aside, regarding indemnification, some model providers explicitly disclaim liability for any legal issues arising from content their models generate. For example, from the leonardo.ai (recently acquired by Canva) Terms of Service:

the Generated Content is provided “as is” without warranties of any kind, either expressed or implied, including but not limited to warranties of merchantability, fitness for a particular purpose, title or non-infringement. We disclaim all liability for any errors, omissions, or inaccuracies in the Generated Content, any infringement on third party rights (including Intellectual Property Rights) and any damages or losses that may arise from your use or reliance on such content.

Caveats

It’s important to note that a model being able to generate infringing content (and thus not being safe for commercial use by the definition above) does not mean that ALL of the content it generates will be infringing.

You could create non-infringing content with a model that can also create infringing content, but you cannot claim the model output is always safe for commercial use (based on the definitions above). For example, consider an image generated from reve.com (which can generate infringing content) with the prompt “a solid blue image”.

Such an image (probably) does not contain infringing content (and thus is probably OK to use in a commercial project), even though it was generated from a model that is able to create infringing content.

It’s the difference between “is always safe for commercial use” and “can sometimes be safe for commercial use”. This is an important distinction when evaluating generative AI models for use. The question “can the model be used for commercial work” potentially obscures that it might also be able to generate infringing content. It is important that you look at the details.

Basically, it boils down to this: can I generate content from the model and be assured that it will not infringe existing trademarks or copyrights?

Managing Risk

So, if “safe for commercial use” revolves around the output, and you can use any model as long as you make sure the output doesn’t contain any infringing content, then why does “safe for commercial use” matter?

It all comes down to risk, and how much of it you, your client, or your organization will tolerate.

If you are an individual making something for yourself, you may have a high degree of confidence that you can check and ensure any generated content you use does not contain anything that infringes on copyright / trademarks / etc.

However, if you are a company or organization with multiple designers and/or contractors, you may not have as high a risk tolerance. You might not want to risk something slipping through all the layers of people working on the project. The safest way to avoid that is to mandate that only models you know are safe for commercial use can be used.

Risk is further reduced in cases where indemnification is offered.

That is why the claim is important and useful. It creates clarity, helps minimize risk, and can reassure stakeholders.

Other Factors to Consider

In addition to whether a model is safe for commercial use, there are other factors to consider when determining whether generative AI can or should be used for your projects.

These factors include:

- Copyright: Can the generated content be copyrighted?

- Ownership and Usage Rights: Who owns the generated content?

- Restrictions: What are the restrictions on what can be generated, or how it can be used?

- Input Assets: What are the rights conveyed on any content submitted to direct the generation?

- Deployment Requirements: Are there any requirements on how the project will be deployed related to the use of generative AI?

Copyright

While not specific to any one model, one of the most important considerations when determining whether generated content is safe for a project is whether the content needs to be copyrighted.

While we could spend an entire post just on the copyrightability of generative AI content, for our purposes, we will focus on just a couple of points.

All of the quotations below are from the United States Copyright Office Report on Copyright and Artificial Intelligence: Part 2 Copyrightability.

Content Entirely Generated by AI

If a work is generated entirely by AI, the U.S. Copyright Office’s current position is that it cannot be registered for copyright.

if content is entirely generated by AI, it cannot be protected by copyright

Basically, if the AI is doing all of the generation, and the only human input is a prompt, the resulting image cannot be copyrighted.

Content Partially Generated by AI or that contains generated content

Adding generated content to a human-authored work does not affect the copyrightability of the overall work.

the inclusion of elements of AI-generated content in a larger human-authored work does not affect the copyrightability of the larger human-authored work as a whole. For example, a film that includes AI-generated special effects or background artwork is copyrightable, even if the AI effects and artwork separately are not.

While the individual elements that are generated by AI cannot be copyrighted on their own (such as the AI-generated special effects), their inclusion does not impact the ability to copyright the larger, human-created piece.

For example, if you use Generative Fill in Photoshop to generate and insert a cartoon character into a photograph you took, you probably won’t be able to copyright the generated cartoon character, but you should be able to copyright the entire piece, as well as the individual photograph.

Content that uses human-created content references

If you use an image as a reference to direct the generation (such as using a drawing as a guide), the elements that are clearly attributable to the human-authored reference are copyrightable.

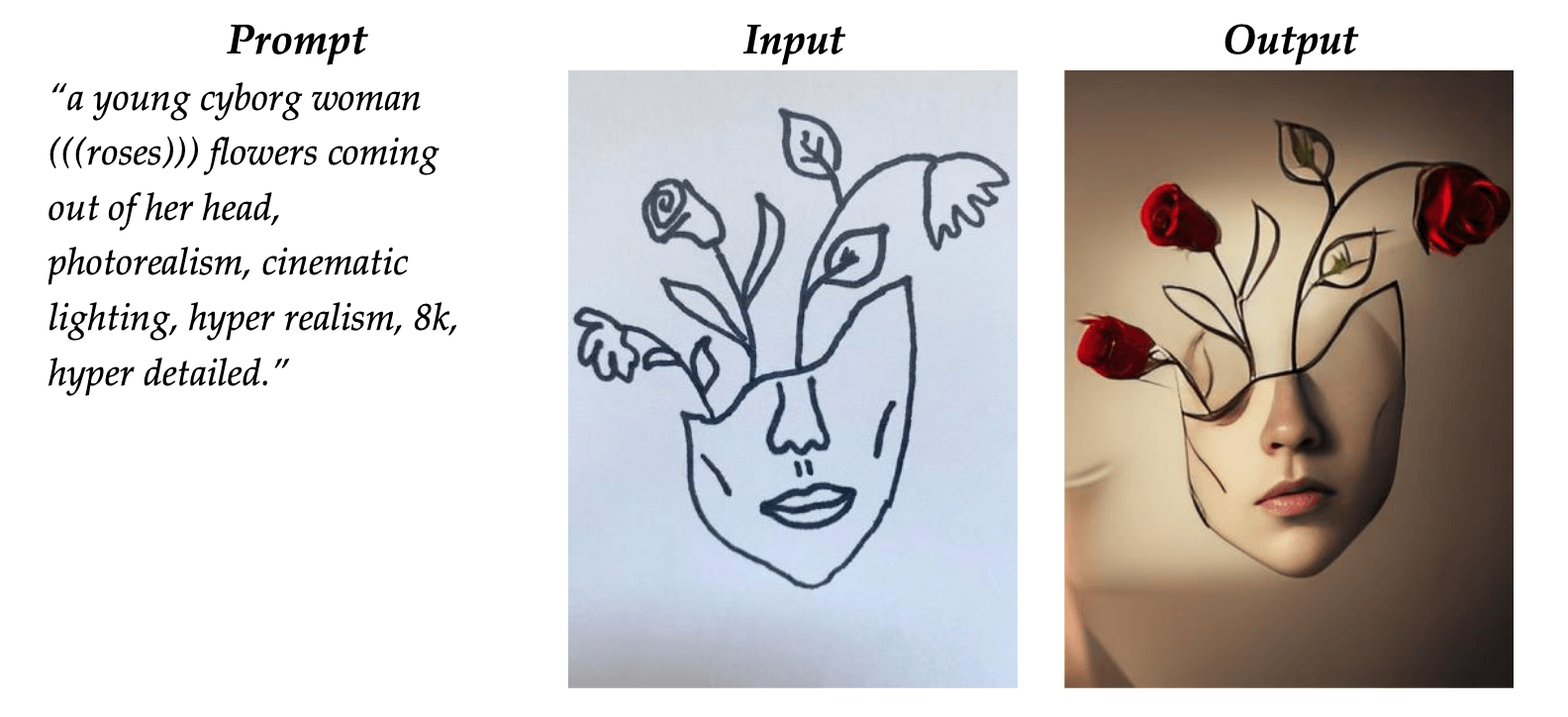

Example from United States Copyright Office Report on Copyright and Artificial Intelligence: Part 2 Copyrightability demonstrating how parts of the generated image can be copyrighted.

This work was granted a copyright registration with the following comment:

Registration limited to unaltered human pictorial authorship that is clearly perceptible in the deposit and separable from the non-human expression that is excluded from the claim

Exactly which elements are copyrightable, however, may require review on a case-by-case basis.

Content inspired by or using generative AI as a reference

Finally, using generated content as a reference or inspiration to create something does not affect the ability to copyright the created work.

In these cases, the user appears to be prompting a generative AI system and referencing, but not incorporating, the output in the development of her own work of authorship. Using AI in this way should not affect the copyrightability of the resulting human-authored work

Whether all of this matters depends on the specific project, how the content will be used, and whether the content needs to be copyrighted. If you need to create a logo, there is a higher risk in using generative AI, as you probably want legal protections that prevent other people from using it. On the other hand, using generative AI to create generic stock-like images for a website may be less of a problem, as you may not be concerned if they are reused.

EU Copyright Law

Copyright law regarding generative AI in the European Union is a bit more complicated due to a lack of EU level clarity, and differences in jurisdictions between member states and the EU.

Just as in the US, purely generated content with no human authorship generally cannot be copyrighted in the EU.

However, in the EU, how a model is trained may have an impact on whether its output can be copyrighted:

The origin of those images/input is crucial not only regarding the ownership of the copyrights generated, but to avoid potential right infringement of pre-existing works. In the case that the algorithm is trained with protected [content] owned by a third party (and protected via copyright, image rights or data protection) without authorisation or without licensing its content, the results may infringe on the rights of these third parties. (European Commission: Artificial intelligence and copyright: use of AI tools to develop new content)

This European Commission article is a good overview of the current state of EU law in this space, and this EUIPO report and European Parliament Study provide more up-to-date overviews.

The key thing to remember is that there is no universal rule. You need to evaluate uses on a case-by-case basis, looking at the requirements for your specific project.

Ownership and Usage Rights

Another key question is who controls the rights to the generated content? Specifically, do you own the output, can you sublicense it, or are you merely licensed to use it under the generating platform’s terms?

For example, Midjourney makes ownership conditional on your company’s size and subscription tier:

If you are a company or any employee of a company with more than $1,000,000 USD a year in revenue, you must be subscribed to a “Pro” or “Mega” plan to own Your Assets. (From Midjourney’s Terms of Service)

Why does this matter? Because if you don’t meet those conditions, you may not “own” the generated content in a way that lets you freely sublicense or use it. The platform itself may retain rights to use or even sublicense the same outputs, which could undercut your ability to control how the content is used and distributed.
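Conditions like this are easy to misread, so it can help to write them out explicitly. The following is only a rough sketch of the single clause quoted above, not of Midjourney’s full terms. The function name and constants are hypothetical, and treating revenue at or below the threshold as having no plan requirement is my assumption from the wording “more than”; other parts of the terms may still apply.

```python
# Hypothetical encoding of the one quoted clause; not a complete reading of the terms.
REVENUE_THRESHOLD_USD = 1_000_000       # "more than $1,000,000 USD a year in revenue"
QUALIFYING_PLANS = {"pro", "mega"}      # plans named in the quoted clause


def meets_ownership_condition(annual_revenue_usd: float, plan: str) -> bool:
    """Return True if the quoted clause does not block ownership of generated assets."""
    if annual_revenue_usd > REVENUE_THRESHOLD_USD:
        # Above the threshold, the clause requires a "Pro" or "Mega" plan.
        return plan.strip().lower() in QUALIFYING_PLANS
    # Assumption: the quoted clause adds no plan requirement at or below the threshold.
    return True


print(meets_ownership_condition(5_000_000, "Basic"))
print(meets_ownership_condition(5_000_000, "Pro"))
```

Even a toy check like this makes it clear that the answer to “do I own the output?” can change as your company grows or your subscription changes.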

Use Restrictions

Are there restrictions in the terms of use for the generative AI model that prohibit you from using the generated content in a way you may need to?

For example, Meta’s Terms of Service prohibit using its AI to:

Generate, promote, disseminate, or otherwise use or facilitate adult content, such as erotic chat, pornography, and content meant to arouse sexual excitement (From Meta AI’s Terms of Service)

Adobe prohibits generating content that would “glorify graphic violence or gore”.

Both of these restrictions prevent usage across a number of legitimate commercial industries.

In addition, some model providers’ terms of use may explicitly put limitations on usage for commercial projects. For example, Meta places some restrictions on commercial use of its Llama 4 model:

Additional Commercial Terms. If, on the Llama 4 version release date, the monthly active users of the products or services made available by or for Licensee, or Licensee’s affiliates, is greater than 700 million monthly active users in the preceding calendar month, you must request a license from Meta, which Meta may grant to you in its sole discretion, and you are not authorized to exercise any of the rights under this Agreement unless or until Meta otherwise expressly grants you such rights. (From the LLAMA 4 Community License Agreement)

Another example is the FLUX.1-dev generative AI model, whose license makes the model available “solely for your Non-Commercial Purposes”.

Ensuring the model provider’s license terms are compatible with your project is vital.

Input Assets

What are the rights claimed by the model creator for any content (images, video, text) that you input to generate the content? If you upload a reference image, are you giving the model provider a license to use (or sublicense the use of) that image, and if so, how?

For example, Higgsfield AI claims a license to use input content (as well as generated output):

you acknowledge that Inputs (as well as the remainder of Your Content) and Outputs may be used by the Company to train, develop, enhance, evolve and improve its (and its affiliates’) AI models, algorithms and related technology, products and services (including for labeling, classification, content moderation and model training purposes), as well as for marketing and promotional purposes. (From Higgsfield’s Terms of Use Agreement)

Again, the specific requirements of your situation will determine whether it matters how reference input content may be used.

Deployment Requirements

Are there any limitations or requirements for how or where your project is deployed that may be impacted by the use of generative AI or specific models?

For example, when submitting a game to Steam, you must specify any use of generative AI, and affirm that the game does not include any infringing content.

Under the Steam Distribution Agreement, you promise Valve that your game will not include illegal or infringing content, and that your game will be consistent with your marketing materials. In our pre-release review, we will evaluate the output of AI generated content in your game the same way we evaluate all non-AI content - including a check that your game meets those promises. … We will also include much of your disclosure on the Steam store page for your game, so customers can also understand how the game uses AI. (AI Content on Steam)

In this case, the use of generated content means you have additional obligations when deploying, and a specific need to ensure you are not generating and using infringing content.

Checklist

So as you can see, there is a lot to consider when determining whether generative AI and output from a specific model can be safely used in any specific project.

Here is a checklist of questions to ask to help guide you:

- Does the provider claim its model’s output is “safe for commercial use”?

- Do they provide indemnification?

- Who owns the outputs? Do you need to sublicense the output?

- Does the provider reserve the right to reuse outputs you generate?

- Are there usage restrictions on the output?

- What happens to any assets used as inputs?

- Do you need to copyright the output?

- Is the generated content used on its own, or part of a larger piece of human-authored work?

- Are there requirements related to the use of generative AI that stem from how and where the project is being deployed?
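If you evaluate models regularly, it may help to record the answers to these questions in a structured form so they can be compared across models and projects. The following is a minimal sketch of that idea, not a legal tool. The class, field names, and messages are all hypothetical, and the answers still have to come from reading each provider’s terms (and, where it matters, from a lawyer).

```python
from dataclasses import dataclass


@dataclass
class ModelAssessment:
    """Checklist answers for one model and one project (hypothetical field names)."""
    claims_commercial_safety: bool
    offers_indemnification: bool
    you_own_outputs: bool
    provider_may_reuse_outputs: bool
    restrictions_block_your_use: bool
    provider_may_reuse_inputs: bool
    output_needs_copyright: bool
    output_used_on_its_own: bool
    deployment_has_ai_requirements: bool


def open_questions(a: ModelAssessment) -> list[str]:
    """Return the checklist items that deserve a closer look for this project."""
    checks = [
        (not a.claims_commercial_safety, "no commercial-safety claim"),
        (not a.offers_indemnification, "no indemnification"),
        (not a.you_own_outputs, "output ownership is limited"),
        (a.provider_may_reuse_outputs, "provider may reuse outputs"),
        (a.restrictions_block_your_use, "usage restrictions conflict with the project"),
        (a.provider_may_reuse_inputs, "provider may reuse input assets"),
        # Per the Copyright section: content generated entirely by AI and used
        # on its own may not be copyrightable.
        (a.output_needs_copyright and a.output_used_on_its_own,
         "standalone generated output may not be copyrightable"),
        (a.deployment_has_ai_requirements, "deployment platform has AI requirements"),
    ]
    return [message for flagged, message in checks if flagged]


# Example: a provider that claims commercial safety but offers no indemnification,
# used to generate a standalone asset that needs copyright protection.
example = ModelAssessment(
    claims_commercial_safety=True,
    offers_indemnification=False,
    you_own_outputs=True,
    provider_may_reuse_outputs=False,
    restrictions_block_your_use=False,
    provider_may_reuse_inputs=False,
    output_needs_copyright=True,
    output_used_on_its_own=True,
    deployment_has_ai_requirements=False,
)
for item in open_questions(example):
    print("-", item)
```

An empty result does not mean a model is risk-free. It only means none of the checklist items were flagged for that project.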

Legal Landscape

As I mentioned at the beginning, I am not a lawyer, and this is not a legal analysis (or legal advice).

The legal landscape around Generative AI is evolving, with the United States and European Union continuing to clarify guidance and laws around generative AI and copyright.

In addition, there are a number of active lawsuits that revolve around multiple issues, including what content the models were trained on, and around the content the models produce.

If you are using generative AI extensively in your projects, it’s probably a good idea to keep an eye on the latest news and developments in this space.

Summary

When companies describe their model as “safe for commercial use”, they are generally indicating that they are confident (and often willing to guarantee) that their generative AI models cannot be used to generate content that infringes copyrights or trademarks. However, this is not a legal definition or standard.

In addition, there are a number of other factors to consider when deciding whether a generative AI model and its output are appropriate for any specific commercial project, including copyright, ownership and usage rights, restrictions on use, input assets and deployment requirements.

While this is not an exhaustive discussion, hopefully it provides a solid basis for understanding the issues, and a good starting point if you want to go deeper on the topic.