There has been a lot of excitement over the past week or so with the release of Google’s Gemini 2.5 Flash image model (Nano Banana). The model offers some of the best output quality, and some of the best ways to control that output, that we have seen thus far (maybe the best).

As usually happens with any big generative AI model release, a fresh wave of clickbaity “Photoshop is dead” posts has followed. This week was no different, though the claims were louder and more frequent, on par with the level of excitement around Gemini 2.5.

As someone who has a lot of experience with “X is dead” moments with Flash (most of which were wrong, except for when it wasn’t!), I have been thinking about this a lot this week, and wanted to share some of those thoughts.

So what’s the reality? Does the improvement in generative AI quality, combined with new modes of control beyond prompting, spell the end of Photoshop? Or is there a deeper misunderstanding of what Photoshop actually is? Does the rise of generative AI threaten Photoshop or make it more valuable than ever?

This post explores those questions and argues that in a world of increasingly powerful generative AI models, Photoshop becomes even more essential in helping creators stand out.

I work for Adobe. These views are my own.

What is the Reality Today?

So what is the reality today? Can generative AI do everything Photoshop can, and if not, where are the gaps?

The short answer is no. While the gap has been decreasing, you still can’t do everything with a generative AI approach that you can in Photoshop. The core issues come down to control. With a generative AI flow, the AI is making the changes, compared to a Photoshop-based flow, where the user is making the edits. It’s this fundamental difference that allows the user to do more with Photoshop today.

So what are some of the things that you can do in Photoshop today, that you can’t do consistently with generative AI?

- Content fidelity: Make changes without unintentionally altering content, such as faces and fine details.

- Deterministic edits: Edits that produce the same, predictable result every time.

- Layer-based editing and composition: Blend modes, opacity, and masks, none of which generative AI outputs replicate structurally.

- Non-destructive editing: Adjustment layers, smart objects, masks, and other workflows that preserve flexibility.

- Pixel-level precision: Specific and precise edits and selections.

- True font usage and output: Editable text with full control over typefaces, layouts, kerning, tracking, and more (not just rasterized text).

- No content restrictions: Unlimited subject matter, without filters or blocked categories (e.g., NSFW), although some generative AI models and implementations also allow this.

- Exact color matching: Consistent, precise color control across assets.

- Vector + raster in a single file: Combine both formats seamlessly.

- Print-optimized workflows: CMYK, spot colors, and prepress controls.

- Color management: Color profiles and cross-medium consistency.

That is not an exhaustive list, but it should give a general sense of where the gap is. This video provides a good current comparison between Photoshop and Nano-Banana.
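To make the "deterministic" and "layer-based" items in the list above a little more concrete, here is a minimal sketch of a multiply blend with opacity and a mask. It is an illustration only, not Photoshop's actual implementation: the `multiply_blend` and `composite` names, the tiny two-pixel "image", and the 0.0 to 1.0 channel range are all assumptions made for the example.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB channels in the range 0.0..1.0


def multiply_blend(base: Pixel, top: Pixel, opacity: float, mask: float) -> Pixel:
    """Blend `top` over `base` with a multiply mode, scaled by opacity and mask."""
    amount = opacity * mask  # 0.0 leaves the base untouched, 1.0 applies the full blend
    return tuple(b + (b * t - b) * amount for b, t in zip(base, top))


def composite(base: List[Pixel], top: List[Pixel],
              opacity: float, mask: List[float]) -> List[Pixel]:
    """Apply the blend pixel by pixel; the same inputs always give the same output."""
    return [multiply_blend(b, t, opacity, m) for b, t, m in zip(base, top, mask)]


# Hypothetical two-pixel image: the mask limits the edit to the first pixel only.
base = [(0.8, 0.6, 0.4), (0.2, 0.2, 0.2)]
top = [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)]
mask = [1.0, 0.0]

result = composite(base, top, opacity=1.0, mask=mask)
```

The masked-out pixel comes back exactly as it went in, and running `composite` again with the same inputs yields the same result. That is the kind of predictability a prompt-driven edit does not guarantee.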

Now, it’s reasonable to expect that generative AI models will eventually be able to do some of these things. I can imagine models generating new file formats that support both raster and vector graphics, or outputs that include real, editable fonts. Color matching and control will likely improve, and some of today’s content restrictions may be relaxed or removed in the mass market models.

However, some things are inherently difficult in a generative AI-only workflow, especially tasks that require specific choices or precise selections, such as, say, editing a specific pixel.

These aren’t necessarily fundamental limitations of generative AI models, but are issues of UI and how you tell the AI what you want to do. Some tasks, like making a single-pixel change in a high-resolution image, are inherently more difficult to express through prompts than through a dedicated UI.

Pixels are more precise than prompts.

Of course, you could build controls on top of generative AI to provide more control and a higher level of precision. But at that point, the question isn’t whether generative AI is better than Photoshop, it’s whether you can build a better version of Photoshop.

So while generative AI can produce amazing outputs, it does not provide the precise control that’s often necessary, particularly in professional and artistic workflows. While this may improve in the future, it will almost certainly never provide the level of control that Photoshop does today (without essentially recreating something like Photoshop).

Photoshop is a Creative Operating System

The fundamental mistake people make when comparing a generative AI workflow to a Photoshop workflow is assuming they’re the same thing. They’re not. One generates an image, the other produces a system of inputs to control what the image is.

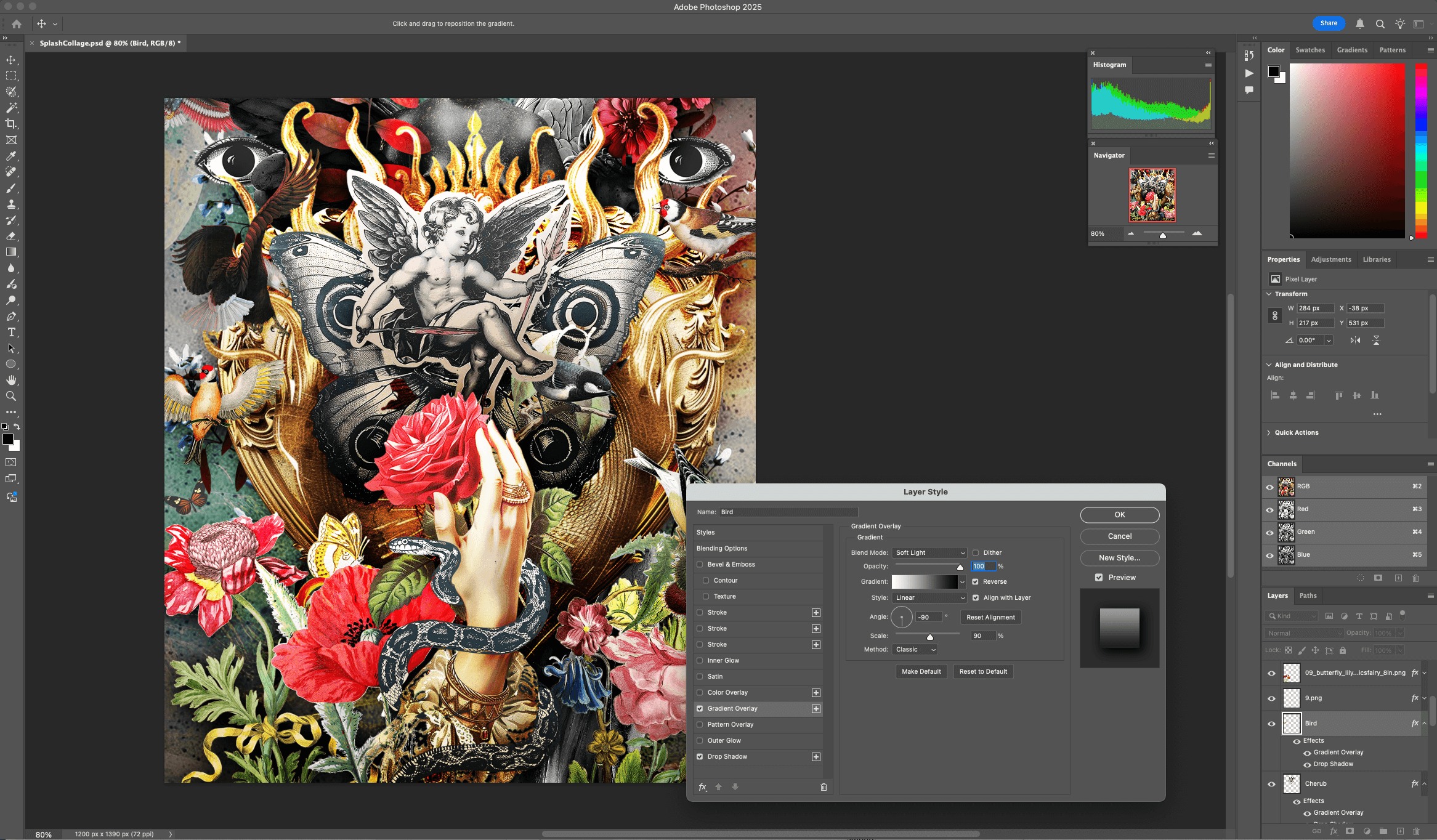

To see this, you need to rethink your views of what Photoshop is. Photoshop is an operating system for creating and running focused applications (PSDs) to control an image and its output. PSDs are essentially small, custom apps with controls that you build up (adjustment layers, masks, effects, etc…) for controlling a specific image.

Photoshop is both the IDE and runtime. PSD is the app.

Example of a PSD as an “app” with custom UI to control the image. (PSD from Paul Trani)

Together they give you non-destructive, precise control over the output of the image, which you can come back to and “run” at any time to get the exact output you want.

That’s the point of Photoshop: not just to make an image, but to build a system that gives you full control over that image, now and in the future. This is what sets it apart.
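As a rough analogy for the "PSD is the app" idea, the sketch below models a document as an untouched source image plus a stack of adjustable controls that is re-run on demand. This is a toy model under stated assumptions, not how Photoshop or the PSD format actually works: `Document`, `Adjustment`, `brightness`, and `contrast` are hypothetical names, and the "image" is just a short list of grayscale values.

```python
from dataclasses import dataclass, field
from typing import Callable, List

Image = List[float]  # grayscale pixel values in the range 0.0..1.0


@dataclass
class Adjustment:
    name: str
    apply: Callable[[Image, float], Image]
    amount: float
    enabled: bool = True


@dataclass
class Document:
    source: Image  # the original pixels are never modified
    adjustments: List[Adjustment] = field(default_factory=list)

    def render(self) -> Image:
        """'Run' the document: apply each enabled adjustment to a copy of the source."""
        image = list(self.source)
        for adj in self.adjustments:
            if adj.enabled:
                image = adj.apply(image, adj.amount)
        return image


def brightness(image: Image, amount: float) -> Image:
    return [min(1.0, max(0.0, p + amount)) for p in image]


def contrast(image: Image, amount: float) -> Image:
    return [min(1.0, max(0.0, (p - 0.5) * amount + 0.5)) for p in image]


doc = Document(source=[0.25, 0.5, 0.75])
doc.adjustments.append(Adjustment("brightness", brightness, 0.25))
doc.adjustments.append(Adjustment("contrast", contrast, 2.0))

first = doc.render()              # [0.5, 1.0, 1.0]
doc.adjustments[0].amount = 0.0   # come back later and turn one control down
second = doc.render()             # [0.0, 0.5, 1.0]
```

The source pixels are unchanged after both renders, and every change goes through a named control that can be revisited later. A generated image, by contrast, carries no such controls with it.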

This is in contrast to generative AI models, which produce assets. You can edit those assets or run more prompts, but the result is always just another output. There’s nothing built into the asset itself that provides detailed, specific control the way Photoshop does.

This is the key that sets Photoshop apart from a purely generative AI workflow: the virtually unlimited control it provides, and the ability to come back to it at any time (by opening the PSD) and control the image output in a known and consistent manner.

The Homogenization of Content

The main argument that generative AI is killing Photoshop is that it makes it much easier for anyone to create content that is “good enough”. And that is true! But the tradeoff is that the workflow has fundamental limits on how much control it offers.

Making it easier to create content that is “good enough” means that more people can and will create content that is “good enough”. That is not necessarily a bad thing in itself. However, the lower skill ceiling (relative to using Photoshop) means more people will be able to push up against it, leading to a homogenization of content and making it more and more difficult for any individual to stand out.

Generative AI raises the floor but flattens the field.

Standing out in a World of “Good Enough”

In this new world, there are only a few ways to stand out (assuming the same level of creativity and vision).

The first is to completely master generative AI creation. With experience and creativity, it’s possible to produce amazing output. But given the relative lack of control and lower overall skill ceiling, there will be many more people pushing up against its limits. You may stand out, but it will be part of a much larger group that is also “standing out”.

The second way is to master a tool like Photoshop, which gives you absolute control. Whether you are starting from scratch or also using generative AI, working within Photoshop provides a much higher overall skill ceiling. This creates more headroom to separate yourself from the masses. The more you master Photoshop, the more space there is to stand apart from everyone else.

And because Photoshop can also leverage generative AI content, it will always extend that ceiling further than AI alone. Put simply, the best generative AI creator in the world will always be able to push their skill ceiling even higher by learning Photoshop.

At equal high skill levels, someone using Photoshop will consistently be able to create more expressive and refined content than someone using only generative AI. It doesn’t mean you can’t make great work with AI alone. But if you have a clear vision of what you want to create, Photoshop always gives you more control to realize it.

Photoshop is More Important Than Ever

If you say generative AI makes Photoshop obsolete, you’re comparing the wrong things. One generates an image. The other builds a system that gives you precise, repeatable control over that image. It’s like saying instant ramen makes a fully stocked kitchen obsolete; they are not the same thing.

If the claim is that generative AI plus new tools for control will replace Photoshop, you’re overlooking the obvious: the tool that already gives you the most control over generative AI outputs is Photoshop itself.

And if the claim is simply that AI lowers the bar so anyone can make something that looks “good enough”, that’s not new either. Tools like Adobe Express and Canva have already made it easier for anyone to create that type of content. But the point of Photoshop was never just to make something that is “good enough.” It’s about pushing ideas further, building systems of layers and edits you can refine and revisit, and giving you the precision and control to make exactly what you want.

There will always be a need for control and for the ability to create precise, exacting outputs. There will always be people who want to push their creativity and vision, to tell a story, and to stand out. Photoshop is the standard for that kind of control, and that role becomes even more important with the rise of generative AI.